AI & Equity: Unveiling Bias and Building Bridges

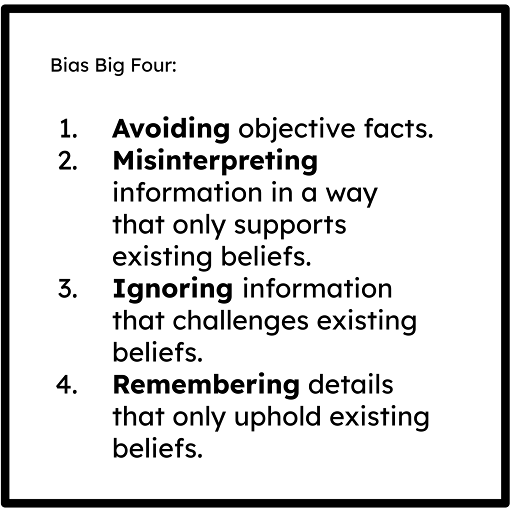

We've been talking about AI in education, its potential, and the pitfalls to avoid. But one crucial conversation often gets swept under the rug: bias. We hear whispers of "identifying bias" or "ethical AI," but rarely do we get concrete tools to tackle these complex issues.

That's where my "A.M. I. R.ight?" framework comes in. Which, if any, of the following apply to you:

These simple yet powerful prompts help us confront our own biases, the unseen baggage we carry around. It's the essential first step before we even think about AI bias. But how do we move from self-awareness to identifying bias in AI outputs? The answer lies in a brutal mirror: how vigilant are we about spotting bias in ourselves? Can we sniff out our own prejudices against certain people, ideas, or even articles we mistake for truth? Do we lean towards or against things without digging deeper, unwilling to be proven wrong?

Only then can we truly begin to see the bias woven into AI output. Because let's face it, these tools are trained on data sets, and data sets are inherently flawed. They're built on the biases of the people who created them, reflecting the inequalities and blind spots of our world.

So, what can we do? How can we leverage AI's power without perpetuating harmful inequities? Here are some keys:

- Become a bias detective: Develop critical thinking skills to question everything, including AI outputs. Don't blindly accept what machines tell you.

- Seek diverse perspectives: Expose yourself to voices and viewpoints different from your own. Challenge your assumptions and expand your understanding of the world.

- Demand transparency: Ask how AI tools were trained, by whom, and on what data. Look for red flags of bias and advocate for fairer data sets.

- Embrace continuous learning: This journey is never-ending. Stay updated on the latest research on AI bias and keep refining your own ability to identify and combat it.

And for those who want to delve deeper, there's more:

- My first book, Demarginalizing Design, explores how bias infiltrates design and how we can create more inclusive solutions.

- My upcoming book, co-authored with Ken Shelton (title TBD), tackles the very topic of ethical and equitable AI use. Stay tuned for updates!

Let's not let AI become another tool that perpetuates inequalities. By acknowledging our own biases, critically examining AI outputs, and demanding fairer data sets, we can build a future where technology empowers, not marginalizes. Together, we can bridge the gap and create a truly equitable AI landscape in education and beyond.

Remember, the fight against bias starts with awareness and action. Let's embark on this journey together, with open minds and critical hearts.

Speaking of transparency: yes, I did use AI to help edit this post, which is based on my own words. Below is a sample prompt all educators and students should utilize:

Act like a copyeditor and proofreader and edit this manuscript according to the Chicago Manual of Style. Focus on punctuation, grammar, syntax, typos, capitalization, formatting and consistency.

Dee is the author of Demarginalizing Design and a passionate and energetic educator and learner with over two decades of instructional experience at the K-12 and collegiate levels. Dee holds undergraduate and master’s degrees in sociology with special interests in education, race relations, and equity. Dee is an award-winning presenter, TEDx Speaker, Google Certified Trainer, Google Innovator, and Google Certified Coach who specializes in creative applications for mobile devices and Chromebooks, low-cost makerspaces, and gamified learning experiences. Dee is a founding mentor and architect for the Google Coaching program pilot, Dynamic Learning Project, and a co-founder of Our Voice Academy, a program aimed at empowering educators of color to gain greater visible leadership and recognized expertise. Dee is also the creator of the design thinking educational activities Solve in Time!® and Maker Kitchen™️ and a co-host of the Liberated Educator podcast. Dee practices self-care by reading, playing percussion, and roasting, brewing, and drinking coffee.